Why you like, or will like, the Vision Pro headset

Although I initially met Apple's progressive design and tech changes over the past 6-7 years with skepticism (except for M1), I now concede that their steady advancements in display tech, cameras, and material innovation have paid off. These elements have come together in the Vision Pro headset.

Cognetics & AR

Cognetics is the study of how human cognition interacts with tools or systems, particularly in the context of human-computer interaction. We've evolved to process and interpret information from our surroundings and to interact with our environment intuitively. AR technology such as EyeSight augments this ability by introducing digital information into our physical world in a way that aligns with our brain's cognitive processes. Our brain is highly adept at integrating information from multiple senses to create a coherent perception of reality. In VR, this integration process is disrupted, which can cause cognitive dissonance, confusion, and even motion sickness.

"AR technology such as EyeSight augments this ability by introducing digital information into our physical world in a way that aligns with our brain's cognitive processes."

Built for Personal Experience

The current AR tech isn't rooted in mobile computing; rather, it's aimed at enhancing personal computing and individual spaces. Apple's CEO, Tim Cook, encapsulates this notion perfectly by stating, "AR is cool because it adds magic to our individual realities, enriching our personal lives and our ability to communicate and collaborate with the specific people we choose to engage with." Contrary to the mainstream idea of "The Metaverse," where everyone shares the same reality, Apple's vision suggests a smaller, personalized virtual world that is inclusive only of those relevant to us.

"Apple's vision suggests a smaller, personalized virtual world that is inclusive only of those relevant to us."

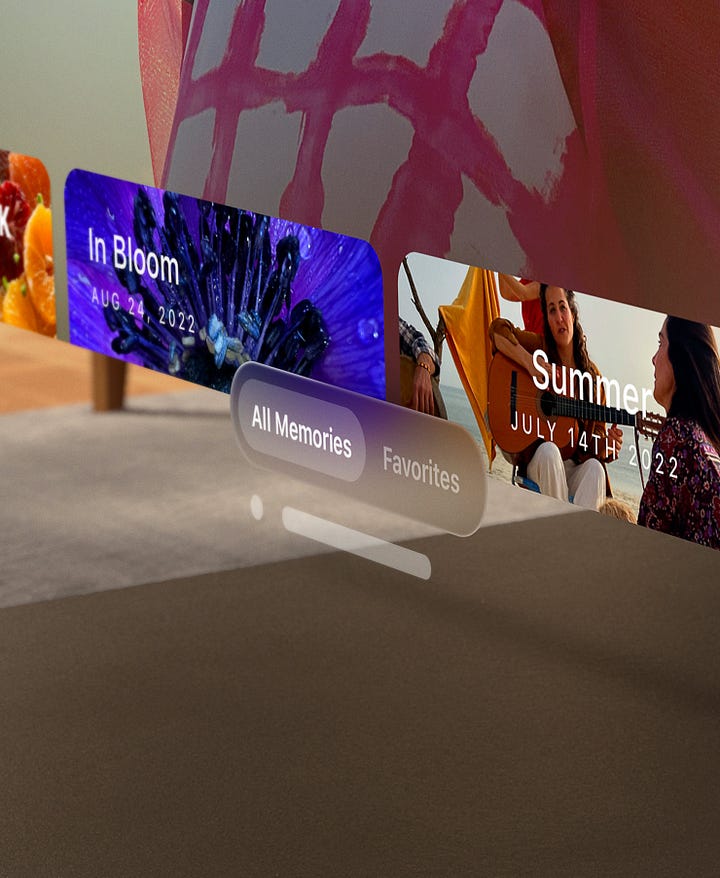

To better appreciate this approach, I draw inspiration from the PPP rule coined by Tamil filmmaker Selvaraghavan. This framework outlines the Private (known only to you), Personal (known to you and your close ones), and Public (known to your wider circle) facets of an individual's life as a way to understand their needs and intent. Apple's demonstration of Vision Pro reflects this principle, focusing on private and personal experiences such as work, entertainment, social capture, and remote collaboration in a personal space.

As for the concern about a tethered power supply, it's worth noting that this product is envisioned for use within personally controlled spaces where mobility is minimal, which makes the concern largely irrelevant. From an industrial designer's viewpoint, it's certainly far more portable than setups like the PS5.

Harnessing Locus of Attention and Achieving State of Flow

"Locus of attention" signifies the point of focus or the object of a person's concentration. This is where a person's mental energy is directed, be it a task, a thought, or a sensory input like a sound or an image.

For instance, while you're engrossed in a book, the sound of a falling lamp might pull your focus from the book to the noise. Apple demonstrated this masterfully in their demo: when someone enters your field of view while you're immersed in the headset, the interface intelligently redirects your attention to them, mimicking your natural response. This is a product that builds on existing human behavior, and Apple deserves appreciation for that. Adaptive sound will only enhance this interaction pattern.

Eye tracking is another example that amplifies this "locus of attention" approach. It acts as a proxy for user intent, selecting relevant UI elements or information based on where you're looking.

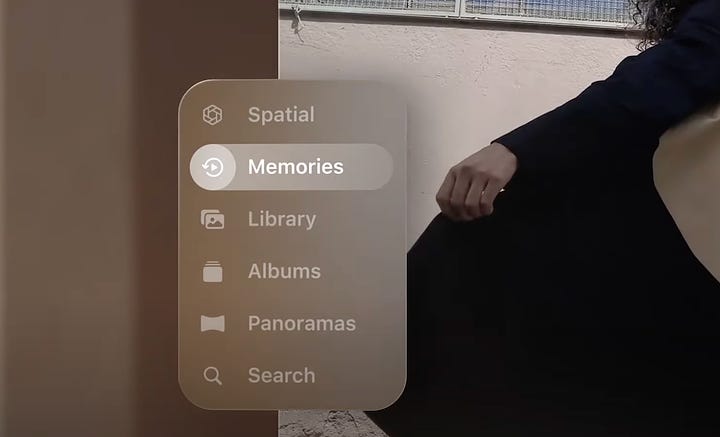

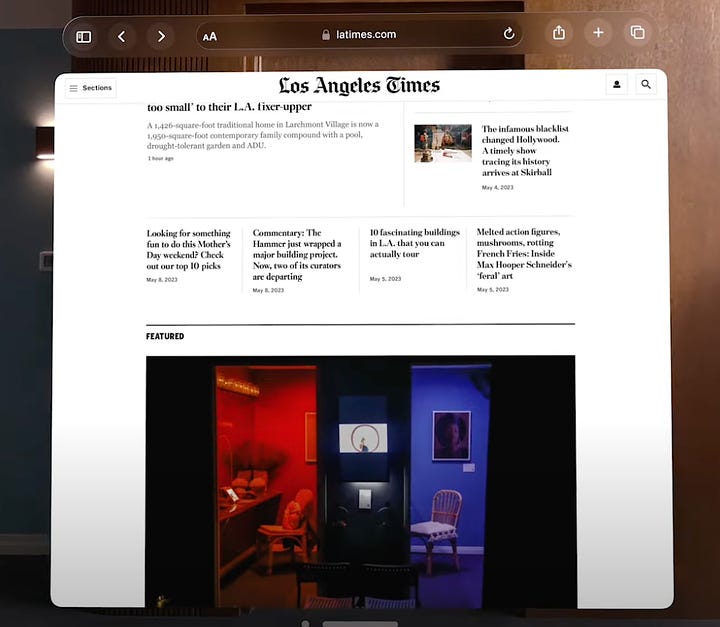

Users do not care what's inside the headset as long as it does what they need. What users want is convenience and results, but all they see is the interface. As far as the customer is concerned, the interface is the product. I don't know how many people paid attention to the human interface design section of the keynote, but every interface and interaction design decision there resulted in ambient affordances and context-aware interactions.

"Eye-tracking technology amplifies this 'locus of attention' approach. It is a proxy for user intent, selecting relevant UI elements or information based on where you're looking."

These minute interactions cumulatively establish an immersive flow state, distinct from the overstimulated flow state of social media scrolling. Immersive interaction aims to distribute sensory engagement rather than overwhelm it, putting you in a state of flow and creating a balanced immersive experience.

Interaction Augmentation through Soundscapes

A specific detail in the Vision Pro demo that caught my attention was the use of Apple's distinct soundscapes (ringtones and feedback sounds) from iOS, iPadOS, and macOS. This subtle integration is a testament to Apple's unique position as a tech company with a comprehensive catalog of hardware and software platforms, each equipped with its own unique and familiar sound bites.

Unlike other companies, Apple has the advantage of providing a sense of familiarity within the Vision Pro's user interface through these soundscapes. Meta could commission someone as renowned as Hans Zimmer to create a new soundscape for the Quest Pro, but it is the familiarity of Apple's sound bites that eases the adoption of a new technology like the Vision Pro.

By strategically incorporating these auditory elements, Apple ensures that the user experience of the Vision Pro is immersive, familiar, and far from alienating, providing a comfort level that's hard to match.

Final thoughts

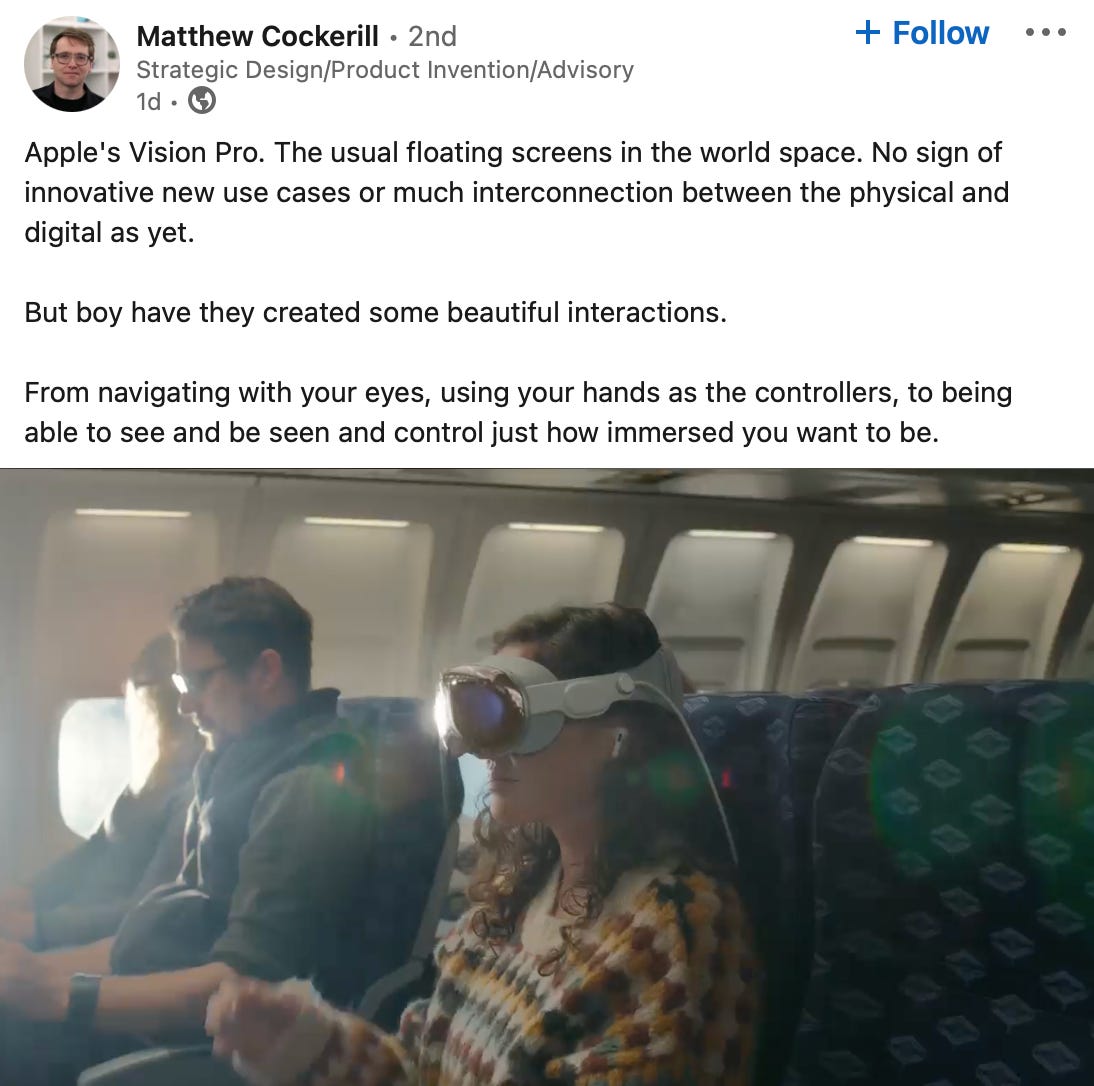

As I wrap up, I want to emphasize that I've tried to present multiple reasons from both an industrial and an interaction design perspective. While writing this article, I came across a thought-provoking post from Matthew Cockerill, former Creative Director at Seymourpowell, that is worth acknowledging. Several people have raised concerns about the lack of obvious use cases for the Vision Pro.

However, as Matthew also points out, Apple has thoughtfully crafted and refined interactions unlike anything we've seen before. These interactions will pave the way for developers and creators to build the 'killer app' that could push the Vision Pro headset into the mainstream.

Much like how the iPhone's multi-touch interface opened a realm of possibilities, the Vision Pro's integration of eye-tracking and gesture control promises a whole new frontier of novel, immersive experiences.